Today I drafted a module that mixes ZODB with Protocol Buffers. It’s looking good! I was hoping not to use metaclasses, but metaclasses solved a lot of problems, so I’ll keep them unless they cause deeper problems.

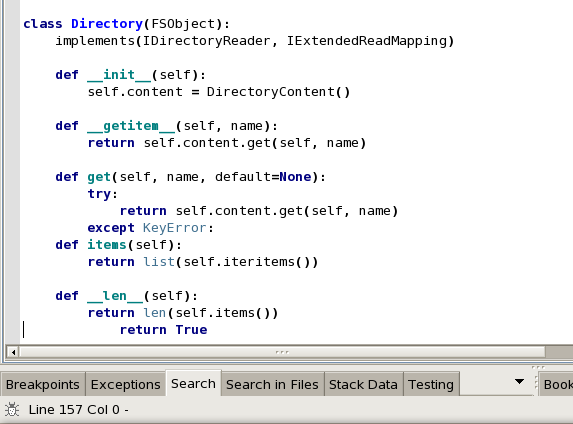

The code so far lets you create a class like this:

    class Tower(Persistent):
        __metaclass__ = ProtobufState
        protobuf_type = Tower_pb

        def __init__(self):
            self.name = "Rapunzel's"
            self.height = 1000.0
            self.width = 10.0

        def __str__(self):
            return '%s %fx%f %d' % (
                self.name, self.height, self.width, self.build_year)

The only special lines are the second and third. The second line says the class should use a metaclass that causes all persistent state to be stored in a protobuf message. The third line says which protobuf class to use. The protobuf class comes from a generated Python module (not shown).

If you try to store a string in the height attribute, Google’s protobuf code raises an error immediately, which is a nice side benefit. Also, this Python code does not have to duplicate the content of the .proto file. Did you notice the build_year attribute? Nothing sets it, but since it’s defined in the message schema, its default value can be read at any time. Here is the message schema, by the way:

    message Tower {
        required string name = 1;
        required float width = 2;
        required float height = 3;
        optional int32 build_year = 4 [default = 1900];
    }

The metaclass creates a property for every field defined in the protobuf message schema. Each property delegates the storage of an attribute to a message field. The metaclass also adds __getstate__, __setstate__, and __new__ methods to the class; these are used by the pickle module. Since ZODB uses the pickle module, the serialization of these objects in the database is now primarily a protobuf message. Yay! I accomplished all of this with only about 100 lines of code, including comments. 🙂
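To make the mechanism concrete, here is a rough sketch of the idea. It is not the actual module. To keep it runnable without protobuf or ZODB, it uses a made-up stand-in class, `FakeTowerMessage`, in place of the generated `Tower_pb`, and JSON in place of the real wire format. The names `FakeTowerMessage`, `FIELDS`, `get`, `set`, `_message`, and `ProtobufStateSketch` are all assumptions for illustration; only `SerializeToString` and `ParseFromString` mirror method names that real protobuf messages have. It is also written with Python 3 metaclass syntax rather than `__metaclass__`.

```python
import json


class FakeTowerMessage:
    """Hypothetical stand-in for the generated Tower_pb class (not real protobuf)."""

    # field name -> (accepted Python type, default value)
    FIELDS = {
        "name": (str, ""),
        "width": (float, 0.0),
        "height": (float, 0.0),
        "build_year": (int, 1900),
    }

    def __init__(self):
        self._values = {}

    def get(self, field_name):
        # Unset fields fall back to the schema default.
        return self._values.get(field_name, self.FIELDS[field_name][1])

    def set(self, field_name, value):
        expected_type = self.FIELDS[field_name][0]
        if not isinstance(value, expected_type):
            raise TypeError("%s must be %s" % (field_name, expected_type.__name__))
        self._values[field_name] = value

    def SerializeToString(self):
        # JSON stands in for the protobuf wire format in this sketch.
        return json.dumps(self._values, sort_keys=True).encode("utf-8")

    def ParseFromString(self, data):
        self._values = json.loads(data.decode("utf-8"))


def _make_property(field_name):
    # Each property delegates storage of one attribute to a message field.
    def getter(self):
        return self._message.get(field_name)

    def setter(self, value):
        self._message.set(field_name, value)

    return property(getter, setter)


class ProtobufStateSketch(type):
    """Sketch of a metaclass that keeps all state in a message object."""

    def __new__(mcls, name, bases, namespace):
        # Assumes the class body defines protobuf_type directly.
        message_type = namespace["protobuf_type"]
        for field_name in message_type.FIELDS:
            namespace[field_name] = _make_property(field_name)

        def new_instance(cls, *args, **kwargs):
            # Create the message before __init__ runs, so properties work there.
            instance = object.__new__(cls)
            instance._message = message_type()
            return instance

        def get_state(self):
            return self._message.SerializeToString()

        def set_state(self, state):
            self._message = message_type()
            self._message.ParseFromString(state)

        namespace["__new__"] = new_instance
        namespace["__getstate__"] = get_state
        namespace["__setstate__"] = set_state
        return super().__new__(mcls, name, bases, namespace)


class Tower(metaclass=ProtobufStateSketch):
    protobuf_type = FakeTowerMessage

    def __init__(self):
        self.name = "Rapunzel's"
        self.height = 1000.0
        self.width = 10.0


tower = Tower()
state = tower.__getstate__()      # what pickle would store as the object state

# Roughly what unpickling does: build a bare instance, then restore its state.
restored = Tower.__new__(Tower)
restored.__setstate__(state)
```

In this sketch, `tower.build_year` reads the default from the stand-in schema even though nothing sets it, and assigning a string to `tower.height` raises a `TypeError` right away. The real module gets both behaviors from protobuf itself instead of from hand-written checks.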

I have a solution for subclassing that’s not beautiful, but workable. Next, I need to work on persistent references and _p_changed notification. Once I have those in place, I intend to release a pre-alpha and change ZODB to remove the pickle wrapper around database objects that are protobuf messages. At that point, when combined with RelStorage, ZODB will finally store data in a language-independent format.

As a matter of principle, important data should never be tied to any particular programming language. Still, the fact that so many people use ZODB even without language independence is a testament to how good ZODB is.

Jonathan Ellis pointed out Thrift, an alternative to protobuf. Thrift looks like it could be good, but my customer is already writing protobuf code, so I’m going to stick with that for now. I suspect almost everything I’m doing will be applicable to Thrift anyway.

I know what some of you must be thinking: what about storing everything in JSON like CouchDB? I’m sure it could be done, but I don’t yet see a benefit. Maybe someone will clue me in.