I have been planning to compare mod_wsgi with paste.httpserver, which Zope 3 uses by default. I expected any difference to be small, since parsing HTTP isn’t exactly computationally intensive. Today I finally had a good chance to run the test on a new Linode virtual host.

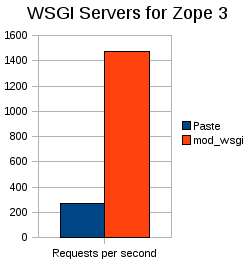

The difference blew me away. I couldn’t believe it at first, so I double-checked everything. The results came out about the same every time, though.

I used the ab command to run this test, like so:

```
ab -n 1000 -c 8 http://localhost/
```
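To repeat the benchmark at several concurrency levels without copying numbers by hand, a small Python helper can run ab and pull out its mean throughput. This is a sketch under assumptions: `ab` must be on the PATH, the function names `run_ab` and `parse_rps` are hypothetical, and only the “Requests per second” line of ab’s text report is used.

```python
import re
import subprocess

# ab prints its mean throughput on a line like
# "Requests per second:    123.45 [#/sec] (mean)".
RPS_PATTERN = re.compile(r"Requests per second:\s+([\d.]+)")


def parse_rps(ab_output):
    """Extract the mean requests-per-second figure from ab's report."""
    match = RPS_PATTERN.search(ab_output)
    if match is None:
        raise ValueError("no 'Requests per second' line in ab output")
    return float(match.group(1))


def run_ab(url, requests=1000, concurrency=8):
    """Run ApacheBench once and return its mean requests per second."""
    result = subprocess.run(
        ["ab", "-n", str(requests), "-c", str(concurrency), url],
        capture_output=True, text=True, check=True,
    )
    return parse_rps(result.stdout)
```

For example, `run_ab("http://localhost/", concurrency=1)` and `run_ab("http://localhost/", concurrency=8)` reproduce the two runs discussed in this post.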

The requests are directed at a simple Zope page template with no dynamic content (yet), but the Zope publisher, security, and component architecture are all deeply involved. The Paste HTTP server handles up to 276 requests per second, while a simple configuration of mod_wsgi handles up to 1476 per second. Apparently, Graham’s beautiful Apache module is over 5 times as fast for this workload. Amazing!

Well, admittedly, no… it’s not actually amazing. I ran this test on a Xen guest that has access to 4 cores. I configured mod_wsgi to run 4 processes, each with 1 thread. Because every process has its own Python interpreter and its own global interpreter lock, this mod_wsgi configuration has no lock contention. The Paste HTTP server lets you run multiple threads, but not multiple processes, so all of its threads compete for a single global interpreter lock, leading to enormous contention. The Paste HTTP server is easier to get running, but it’s clearly not intended to compete with the likes of mod_wsgi for production use.
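The effect of the global interpreter lock can be seen outside Zope with a generic experiment: run the same CPU-bound, pure-Python function on a pool of threads and on a pool of processes. This is an illustrative sketch, not the benchmark above; the task size, the worker count of 4 (chosen to mirror the 4 cores), and the helper names are arbitrary assumptions, and timings will vary by machine and Python version.

```python
"""Threads vs. processes on CPU-bound work (illustrative sketch)."""
import time
from concurrent.futures import ProcessPoolExecutor, ThreadPoolExecutor


def cpu_bound(n):
    # Pure-Python arithmetic: on a standard CPython build a thread
    # must hold the GIL for every bytecode it executes here.
    total = 0
    for i in range(n):
        total += i * i
    return total


def run_pool(executor_cls, tasks, workers):
    # Map the CPU-bound function over the tasks with the given pool type.
    with executor_cls(max_workers=workers) as pool:
        return list(pool.map(cpu_bound, tasks))


def timed(executor_cls, tasks, workers):
    # Return the results together with the wall-clock time they took.
    start = time.perf_counter()
    results = run_pool(executor_cls, tasks, workers)
    return results, time.perf_counter() - start


if __name__ == "__main__":
    tasks = [1_000_000] * 8  # 8 concurrent jobs, loosely like ab -c 8
    for cls in (ThreadPoolExecutor, ProcessPoolExecutor):
        _, seconds = timed(cls, tasks, workers=4)
        print(f"{cls.__name__}: {seconds:.2f} s")
```

On a multi-core machine the thread pool typically takes about as long as running the tasks serially, because only one thread can execute Python bytecode at a time, while the process pool can spread the work across cores.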

I confirmed this explanation by running `ab -n 1000 -c 1 http://localhost/`; with only one request in flight at a time, both servers handled just under 400 requests per second. Clearly, for a CPU-bound Python application like this one, running multiple processes is a much better idea than running multiple threads, and with mod_wsgi, running multiple processes is now easy. My instance of Zope 3 is running RelStorage 1.1.3 on MySQL. (This also confirms that the MySQL connector in RelStorage can poll the database at least 1476 times per second. That’s good to know, although even higher speeds should be attainable by enabling the memcached integration.)
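The reported numbers also fit the GIL explanation arithmetically. The short calculation below uses only figures from this post and rounds “just under 400” up to 400, an assumption that makes the mod_wsgi estimate slightly conservative. The helper `scaling_summary` is a hypothetical name. With those inputs, mod_wsgi reaches about 3.7 times the single-request throughput with 4 processes (roughly 92% of an ideal 4x), while Paste at `-c 8` delivers only about 0.69 times its single-request rate.

```python
# Figures from this post; "just under 400" is rounded up to 400 (assumption).
SINGLE_RPS = 400.0     # ab -c 1, either server
PASTE_RPS = 276.0      # ab -c 8, Paste HTTP server (threads)
MOD_WSGI_RPS = 1476.0  # ab -c 8, mod_wsgi with 4 processes x 1 thread
PROCESSES = 4


def scaling_summary(single, parallel, workers):
    """Return (speedup over one worker, fraction of ideal linear scaling)."""
    speedup = parallel / single
    return speedup, speedup / workers


speedup, efficiency = scaling_summary(SINGLE_RPS, MOD_WSGI_RPS, PROCESSES)
print(f"mod_wsgi: {speedup:.2f}x single-request rate, {efficiency:.0%} of ideal")
print(f"Paste at -c 8: {PASTE_RPS / SINGLE_RPS:.2f}x single-request rate")
print(f"mod_wsgi vs. Paste at -c 8: {MOD_WSGI_RPS / PASTE_RPS:.2f}x")
```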

I mostly followed the repoze.grok on mod_wsgi tutorial, except that I used zopeproject instead of Repoze or Grok. The key ingredient is the WSGI script that passes requests to my Zope application. Here is my WSGI script (sanitized):

```python
# Set up sys.path by executing the application's control script.
with open('/opt/myapp/bin/myapp-ctl') as f:
    code = f.read()
exec(code)

# Load the Zope application from its Paste Deploy configuration.
from paste.deploy import loadapp

zope_app = loadapp('config:/opt/myapp/deploy.ini')


def application(environ, start_response):
    # Translate the path: serve the site root from the /myapp folder,
    # using Zope's ++vh++ virtual hosting syntax.
    path = environ['PATH_INFO']
    host = environ['SERVER_NAME']
    port = environ['SERVER_PORT']
    scheme = environ['wsgi.url_scheme']
    environ['PATH_INFO'] = (
        '/myapp/++vh++%s:%s:%s/++%s' % (scheme, host, port, path))

    # Call Zope.
    return zope_app(environ, start_response)
```

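This script is mostly trivial, except that it modifies the `PATH_INFO` variable so that the root URL maps to the `/myapp` folder inside Zope; the `++vh++` segment tells Zope the public scheme, host, and port, so the URLs it generates point back at the site root. I’m sure the same path translation could be done with Apache rewrite rules, but I find this way easier.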
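The same path translation can also be factored into reusable WSGI middleware, which makes it testable without Apache or Zope. This is a sketch: `make_vh_middleware` and `echo_path` are hypothetical names, `/myapp` is the folder used in the script above, and copying `environ` instead of modifying it in place is a design choice of this sketch rather than something the original script does.

```python
def make_vh_middleware(app, root="/myapp"):
    """Wrap a WSGI app so the site root is served from a Zope folder."""
    def middleware(environ, start_response):
        # Work on a copy so the caller's environ dict is left untouched.
        environ = dict(environ)
        scheme = environ["wsgi.url_scheme"]
        host = environ["SERVER_NAME"]
        port = environ["SERVER_PORT"]
        path = environ.get("PATH_INFO", "")
        # Same rewrite as the script: <root>/++vh++scheme:host:port/++<path>
        environ["PATH_INFO"] = "%s/++vh++%s:%s:%s/++%s" % (
            root, scheme, host, port, path)
        return app(environ, start_response)
    return middleware


def echo_path(environ, start_response):
    # Stand-in for the Zope app: returns the PATH_INFO it received.
    start_response("200 OK", [("Content-Type", "text/plain")])
    return [environ["PATH_INFO"].encode("utf-8")]


if __name__ == "__main__":
    app = make_vh_middleware(echo_path)
    env = {"wsgi.url_scheme": "http", "SERVER_NAME": "example.com",
           "SERVER_PORT": "80", "PATH_INFO": "/index.html"}
    body = b"".join(app(env, lambda status, headers: None))
    print(body.decode())  # /myapp/++vh++http:example.com:80/++/index.html
```

In the real WSGI script, `application = make_vh_middleware(zope_app)` would take the place of the hand-written `application` function.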